Why we must stop treating AI models like search engines—and why professional AI Visibility requires a completely new mindset.

Anyone talking about “visibility in LLMs” today almost instinctively reaches for the old search engine metaphor. This pattern of thinking is understandable—but it is dangerous. It applies a principle of order to a world where that kind of order simply does not exist.

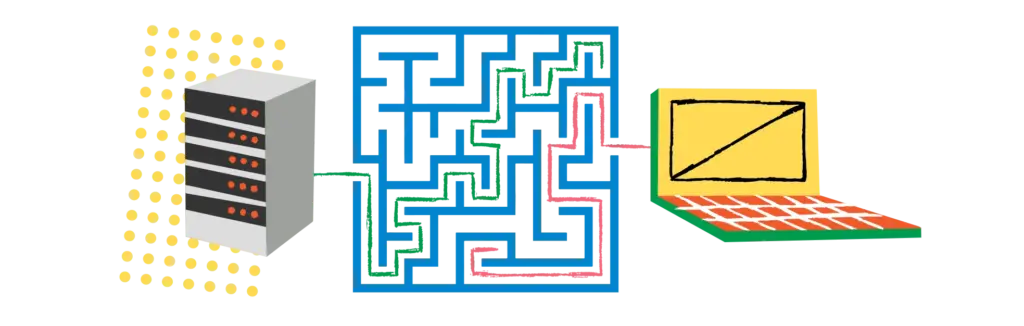

Search engines work with lists that express priorities. A “Rank 1” is an explicit, algorithmically generated judgment. An LLM, on the other hand, does not generate a list. It generates a sequence of text: token by token, based on probabilities, context, and the compression of internal knowledge.

The implication is fundamental: In AI automations and LLMs, there is no ranking. There is only recognition or hallucination.

This is where the misunderstanding of the current GEO (Generative Engine Optimization) model begins. It assumes visibility can be measured by counting how often a brand appears in the output. That is roughly equivalent to judging the quality of a novel by counting how frequently a character’s name appears—regardless of whether they are portrayed as the hero or the fool.

The central shift: Visibility in LLMs is not created by competing for attention. It is created by stable anchoring within the model’s knowledge space.

The Category Error: Retrieval Logic Fails in a Generative World

Most approaches in the digital marketing and SEO industry fail not because of technology, but because of their mental model. They treat a generative model as if it were a retrieval machine.

Retrieval Systems (Classic Search Engines) compare documents against each other. They rely on signals that express relative strength: relevance, authority, freshness. “Ranking” is the logical result—a selection of the best.
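The toy sketch below makes this retrieval logic concrete. It combines the three signals named above into one comparable score and sorts by it. The weights, scores, and URLs are invented for illustration. They do not reflect any real search engine's formula, which combines far more signals.

```python
from dataclasses import dataclass


@dataclass
class Document:
    url: str
    relevance: float  # 0..1, hypothetical query-match score
    authority: float  # 0..1, hypothetical trust/link score
    freshness: float  # 0..1, hypothetical recency score


# Hypothetical weights, chosen only for this illustration.
WEIGHTS = {"relevance": 0.6, "authority": 0.3, "freshness": 0.1}


def score(doc: Document) -> float:
    """Collapse the signals into one number so documents can be compared."""
    return (WEIGHTS["relevance"] * doc.relevance
            + WEIGHTS["authority"] * doc.authority
            + WEIGHTS["freshness"] * doc.freshness)


def rank(docs: list[Document]) -> list[Document]:
    """Retrieval output: an explicit, ordered list with Rank 1 first."""
    return sorted(docs, key=score, reverse=True)


docs = [
    Document("https://example.com/a", relevance=0.9, authority=0.4, freshness=0.2),
    Document("https://example.com/b", relevance=0.7, authority=0.9, freshness=0.8),
    Document("https://example.com/c", relevance=0.3, authority=0.5, freshness=0.9),
]
for position, doc in enumerate(rank(docs), start=1):
    print(position, doc.url, round(score(doc), 3))
```

Document b ends up in first place (0.77 versus 0.68 for a) because its authority and freshness outweigh a's higher relevance. The result is a selection of the best, exactly as described above.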

Generative Models do not operate relatively; they operate probabilistically. They do not select one source “ahead” of another. At each step, they compute a probability distribution over the possible next tokens and pick from it.

The crucial distinction: An answer is not created by selection, but by compression.
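Generation can be sketched as repeated draws from a probability distribution. The example below deliberately simplifies this to a single step of softmax sampling. The candidate names and scores are hypothetical. Real models work over vocabularies of tens of thousands of tokens and apply further decoding settings, such as temperature.

```python
import math
import random


def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Turn raw scores into a probability distribution over candidate tokens."""
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_next(logits: dict[str, float], rng: random.Random) -> str:
    """Draw one token: the output is a single draw, not an ordered list."""
    probs = softmax(logits)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Hypothetical scores for the next word after
# "The joint venture was founded by ..."
logits = {"AcmeCorp": 2.1, "Globex": 1.7, "Initech": 0.4}
probs = softmax(logits)

rng = random.Random(0)
draws = [sample_next(logits, rng) for _ in range(10)]
print({tok: round(p, 3) for tok, p in probs.items()})
print(draws)
```

With a different seed, the same "prompt" yields a different sequence of draws. No candidate is ranked "ahead" in the output; each is simply more or less likely.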

If you try to measure visibility in ChatGPT or Gemini by simply counting brand mentions, you are measuring symptoms without understanding the pathology. This approach rests on two fatal assumptions:

The assumption that Mention = Visibility. But a brand can be mentioned while the model completely misunderstands it. Conversely, it can be omitted even if strong evidence exists.

The assumption that output metrics reflect internal knowledge structure. They do not. A model can give a superficially correct answer while internally linking the wrong entities, ignoring sources, or hallucinating numbers.

What most tools measure is merely reactive behavior, not knowledge architecture. But visibility in LLMs arises exclusively from the knowledge architecture.

Why Classic Monitoring Tools Only Scratch the Surface

Most current monitoring approaches follow a simple logic: Ask a question, analyze the output, and give points if the brand is mentioned. This measures what a model says, not why it says it.
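The scoring logic described above fits in a few lines, which makes its blind spot easy to see. In this hypothetical sketch, the brand, the people, and both answers are invented. A factually correct answer and one that assigns the wrong CEO receive exactly the same score.

```python
def mention_score(answer: str, brand: str) -> int:
    """Naive output metric: 1 point if the brand name appears, else 0."""
    return int(brand.lower() in answer.lower())


brand = "AcmeCorp"
correct = "AcmeCorp's CEO is Jane Doe, appointed in 2021."
wrong = "AcmeCorp's CEO is John Roe, who also runs Globex."

print(mention_score(correct, brand), mention_score(wrong, brand))
```

Both answers score 1. The metric records that the model said the name, not whether it understood anything about the company.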

The problem isn’t the effort; it’s the object of measurement itself. The output of an LLM is not a stable metric. It is probabilistic, context-dependent, and influenced by factors outside your control.

Output-based tools are blind to three structural issues:

Blindness to Evidence: A model can answer correctly while relying on a false or unreliable source.

Semantic Blindness: If a model assigns a CEO to the wrong company or confuses financial products, the output may look linguistically perfect—but it is factually wrong.

Numerical Blindness: Timelines, financial figures, and regulatory quotas are areas where models frequently fail, because they process numbers as sequences of text tokens rather than as quantities.

The key point: Output is a symptom. As long as we measure symptoms, we remain blind to the question that actually matters: Is the model accessing our structured data—or is it merely reproducing the noise of its training data?

The Four Dimensions of True AI Visibility

To understand how visibility is actually generated, we must look beneath the surface. Visibility is not about the answer text; it is about the internal logic of evidence and connection.

1. Attribution: The Identity Check

Is the brand recognized even without being named? Attribution is the anchor. It answers the question: Does the model know that a specific fact belongs to you, even if you aren’t explicitly mentioned? A model that correctly attributes a joint venture or a financial bond to your company shows true visibility. If it names a competitor instead, it reveals that your brand is not anchored in the signals the model learned from, such as your website and branding.
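An attribution check can be sketched as a probe that asks about a fact without naming the brand and then classifies the answer. All company names below are hypothetical. Plain substring matching is a simplification; a production check would need more robust entity matching.

```python
from dataclasses import dataclass, field


@dataclass
class AttributionProbe:
    question: str         # asks about a fact WITHOUT naming the brand
    expected_entity: str  # the company the fact actually belongs to
    competitors: list[str] = field(default_factory=list)  # plausible wrong answers


def attribution_result(probe: AttributionProbe, answer: str) -> str:
    """Classify one model answer (simplified: case-insensitive substring match)."""
    text = answer.lower()
    found_expected = probe.expected_entity.lower() in text
    found_competitor = any(c.lower() in text for c in probe.competitors)
    if found_expected and not found_competitor:
        return "attributed"
    if found_expected and found_competitor:
        return "ambiguous"
    if found_competitor:
        return "misattributed"
    return "not recognized"


probe = AttributionProbe(
    question="Which company formed a joint venture with Globex in 2022?",
    expected_entity="AcmeCorp",
    competitors=["Initech", "Umbrella"],
)
answers = [
    "That was AcmeCorp.",
    "I believe Initech partnered with Globex.",
    "I'm not aware of such a joint venture.",
]
for answer in answers:
    print(attribution_result(probe, answer))
```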

2. Entity Resolution: The Relationship Check

Does the model keep the company graph stable? Hallucinations rarely come from nowhere; they come from wrongly connected entities. If a model confuses partners, shifts ownership percentages, or mixes up roles, the text might look fine, but the content is useless. This dimension marks the difference between “The model has heard of me” (Training Noise) and “The model understands my structure” (Semantic Anchoring).
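One way to make this relationship check measurable is to compare the relations a model states against a reference graph. The sketch below assumes the model's answer has already been converted into (subject, relation, object) triples; that extraction step is not shown. All entities and relation names are hypothetical.

```python
Triple = tuple[str, str, str]  # (subject, relation, object)


def compare_graphs(reference: set[Triple], extracted: set[Triple]) -> dict:
    """Compare the relations a model stated against the company's reference graph."""
    confirmed = reference & extracted
    return {
        "confirmed": confirmed,
        "missing": reference - extracted,   # true relations the model did not state
        "spurious": extracted - reference,  # wrongly connected entities
        "precision": len(confirmed) / len(extracted) if extracted else 0.0,
        "recall": len(confirmed) / len(reference) if reference else 0.0,
    }


reference = {
    ("Jane Doe", "ceo_of", "AcmeCorp"),
    ("AcmeCorp", "jv_partner_of", "Globex"),
    ("AcmeCorp", "parent_of", "Acme Labs"),
}
extracted = {
    ("Jane Doe", "ceo_of", "Globex"),  # role assigned to the wrong company
    ("AcmeCorp", "jv_partner_of", "Globex"),
    ("AcmeCorp", "parent_of", "Acme Labs"),
}

result = compare_graphs(reference, extracted)
print(result["spurious"], round(result["precision"], 2), round(result["recall"], 2))
```

A single misplaced role produces both a spurious relation and a missing one, even though the answer text may read perfectly well.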

3. Evidence Quality: The Proof Check

Can the model prove what it claims? An LLM that cannot name a source is worthless in fields such as medical marketing or corporate communication, where precision is key. Plausibility is not a substitute for verifiability. Evidence quality checks whether a model can cite specific documents, exact dates, or defined reports. A correct answer with a source is proof of competence.
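A minimal evidence check might extract the URLs an answer cites and compare them with a registry of documents the company considers authoritative. The registry, the URLs, and the simple URL pattern are assumptions made for this sketch.

```python
import re

# Hypothetical registry of authoritative company documents.
KNOWN_SOURCES = {
    "https://example.com/reports/annual-report-2023.pdf",
    "https://example.com/press/2022-05-10-joint-venture",
}

# Simplified URL pattern: stops at whitespace, ")" and "]".
URL_PATTERN = re.compile(r"https?://[^\s)\]]+")


def evidence_check(answer: str) -> dict[str, list[str]]:
    """Split the URLs an answer cites into known (verifiable) and unknown sources."""
    cited = [u.rstrip(".,;:") for u in URL_PATTERN.findall(answer)]
    return {
        "verified": [u for u in cited if u in KNOWN_SOURCES],
        "unverified": [u for u in cited if u not in KNOWN_SOURCES],
    }


answer = (
    "According to https://example.com/press/2022-05-10-joint-venture, the joint "
    "venture was signed in May 2022 (see also https://blog.example.org/rumor)."
)
print(evidence_check(answer))
```

An answer without any citation returns two empty lists. That is the case of plausibility without proof.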

4. Temporal & Numerical Precision: The Fact Check

Does the model master time and numbers? The Achilles’ heel of all models lies in sequences and quantities. This is where a brand either remains stable in the probabilistic space or disappears into approximated patterns.
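A fact check for time and numbers can compare claimed values with ground truth taken from the company's own data. In this sketch, every value is hypothetical. Dates must match exactly, while numbers may be given an explicit relative tolerance, for example when a model quotes a rounded figure. Which tolerance is acceptable is a policy decision, not something the code decides.

```python
import math
from datetime import date
from typing import Union

# Hypothetical ground truth, e.g. taken from the company's structured data.
TRUTH: dict[str, Union[date, float]] = {
    "jv_founding_date": date(2022, 5, 10),
    "jv_stake_percent": 51.0,
    "revenue_eur_m": 412.5,
}


def check_fact(key: str, claimed: Union[date, float], rel_tol: float = 0.0) -> bool:
    """Dates must match exactly; numbers may get an explicit relative tolerance."""
    expected = TRUTH[key]
    if isinstance(expected, date):
        return claimed == expected
    return math.isclose(claimed, expected, rel_tol=rel_tol)


claims = [
    ("jv_founding_date", date(2022, 6, 1), 0.0),  # wrong month
    ("jv_stake_percent", 50.0, 0.0),              # approximated pattern, not the fact
    ("revenue_eur_m", 410.0, 0.01),               # rounded figure within 1 %
]
for key, value, tol in claims:
    print(key, check_fact(key, value, tol))
```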

Why Structured Data is the Only Viable Foundation

If models do not create visibility via rankings but via semantic anchoring, the question shifts: What creates this anchoring?

Text alone cannot do it. Text is open to interpretation, and interpretation is the birthplace of error. For a model to make reliable statements about a company, it must receive something that is not open to interpretation: A machine-readable Truth Layer.

This Truth Layer is created through structured data (JSON-LD, Schema.org)—not as an SEO add-on, but as the semantic backbone of the brand. It fulfills three functions that plain text cannot:

Eliminating Ambiguity: A date or a financial figure is no longer extracted from a sentence but processed as a typed piece of information.

Making Relationships Explicit: A Joint Venture is not a story to a machine; it is a relation in a graph. Entities are no longer guessed; they are recognized.

Providing Verifiable References: Structured data turns documents into referenceable objects. Evidence is not hoped for; it is technically enforced via web development standards.
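The sketch below shows what such a Truth Layer can look like as JSON-LD. It uses Schema.org types and properties (`Organization`, `Person`, `Report`, `subOrganization`, `parentOrganization`, `subjectOf`). Every name, date, and URL is hypothetical. Modeling the joint venture as a sub-organization with two parent organizations is one possible choice, not a prescribed pattern. The markup makes the facts typed and explicit; how any particular model uses it is outside what the snippet can guarantee.

```python
import json

ORG_ID = "https://example.com/#organization"  # hypothetical stable identifier

organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "@id": ORG_ID,
    "name": "AcmeCorp",
    "url": "https://example.com/",
    "foundingDate": "2010-03-01",                           # typed date, not prose
    "sameAs": ["https://www.example.org/profiles/acmecorp"],  # hypothetical profile
    "employee": {"@type": "Person", "name": "Jane Doe", "jobTitle": "CEO"},
    "subOrganization": {
        "@type": "Organization",
        "name": "Acme-Globex Joint Venture",
        "foundingDate": "2022-05-10",
        # The joint venture as an explicit relation: two parent organizations.
        "parentOrganization": [
            {"@id": ORG_ID},
            {"@type": "Organization", "name": "Globex"},
        ],
    },
    # A referenceable document that can serve as evidence.
    "subjectOf": {
        "@type": "Report",
        "name": "Annual Report 2023",
        "url": "https://example.com/reports/annual-report-2023.pdf",
        "datePublished": "2024-03-15",
    },
}

snippet = (
    '<script type="application/ld+json">\n'
    + json.dumps(organization, indent=2, ensure_ascii=False)
    + "\n</script>"
)
print(snippet)
```

Before publishing markup like this, it can be checked with Schema.org's own validator.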

AI Visibility is not created by optimizing text, but by architecting the truth.

Professional Monitoring: The Iceberg Model

Since LLMs compress probabilities rather than rank documents, we cannot measure visibility just by watching the output. The output is merely the tip of the iceberg. Professional monitoring must use two complementary questioning techniques:

1. User Prompts (Surface Visibility)

Simulating reality: short, natural, incomplete.

They answer: Does the model find my company at all?

Value: They show how the model reacts in everyday scenarios.

Limit: They do not show why the model reacts that way.

2. Forensic Prompts (Foundation Visibility)

Aiming at the mechanism, not the output.

They are precise, structured, and evidence-oriented. They ask: Can the model name the source? Are the figures correct? Are roles resolved?

Value: They reveal mechanisms. And mechanisms are the only thing that matters for long-term AI Visibility.

You need both. User prompts check if you are spontaneously recognized (Attribution). Forensic prompts check if you are correctly understood and backed by evidence (Evidentiary Capability).
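A monitoring suite that combines both techniques can be sketched as a list of tagged probes. The brand, the category, and the prompt wording are hypothetical. Sending the prompts to a model is left out, because each vendor's API differs. The user prompts deliberately omit the brand to test spontaneous recognition. The forensic prompts name the brand and demand exact, verifiable details.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Probe:
    kind: str       # "user" (surface) or "forensic" (foundation)
    dimension: str  # which of the four dimensions the probe targets
    prompt: str


def build_suite(brand: str, category: str) -> list[Probe]:
    """Build a hypothetical mix of surface and foundation probes."""
    return [
        # Short, natural, incomplete: does the model find the company at all?
        Probe("user", "attribution", f"Which companies are good at {category}?"),
        Probe("user", "attribution", f"best {category} provider"),
        # Precise and evidence-oriented: is the company correctly understood?
        Probe("forensic", "entity_resolution",
              f"Who is the CEO of {brand}, and who are its joint-venture partners?"),
        Probe("forensic", "evidence",
              f"Name the document, with its date, that states {brand}'s latest revenue."),
        Probe("forensic", "temporal_numerical",
              f"In which year was {brand} founded, and what exact stake does it "
              f"hold in its joint venture?"),
    ]


suite = build_suite("AcmeCorp", "industrial sensors")
for p in suite:
    print(f"[{p.kind}/{p.dimension}] {p.prompt}")
```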

Conclusion: Rankings Are Dead – Long Live Architecture

The promise of “Rank 1” has lost its meaning. In generative models, there is no list, no position, no up or down.

Instead of rankings, four structural properties count:

Stability of Attribution

Integrity of Entity Logic

Consistency of Evidence

Precision of Time and Numbers

When we replace “Ranking” with “Source Anchoring,” the entire logic shifts:

Away from competition signals -> Towards structural identity.

Away from measuring output -> Towards measuring semantic foundations.

AI Visibility is therefore not a communications discipline, but an architectural one. It belongs where systems are defined, not where campaigns are planned.

Structured data is not an add-on. It is the operational Truth Layer of a company. Only this layer enables generative models to speak consistently, verifiably, and precisely about a brand.

Let’s stop counting rankings. Let’s start anchoring truths. In the realm of LLMs, the winner is not the loudest, but the most structured.